Readying the Cluster For Work

In the last post, I shared the playbook for initializing the cluster. Its last step was to deploy the metallb, traefik, and longhorn roles, which provide a load balancer, an ingress controller, and a storage provisioner for the cluster.

Metallb Load Balancer Role

When a service of type LoadBalancer is deployed into the cluster, Kubernetes expects the cluster load balancer to assign it an external IP address. Cloud providers such as AWS, Google, and Azure provide a load balancer component which provisions an IP address and routes the traffic within the cloud to the destination cluster. When hosting Kubernetes on bare metal, I need to supply my own load balancer. Enter metallb.

I just need to provide a range of IP addresses for the load balancer to use for any services which require an external IP address. I’ve excluded a range of 10 IP addresses from my router’s DHCP pool to prevent any of those addresses from being used for other devices.
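As a quick sanity check on the address plan, here is a small Python sketch. It is not part of the roles, and the 192.168.1.x addresses and the DHCP range are made-up examples; substitute your own. It expands an inclusive first-last range like the one metallb is given and checks that it does not overlap the router’s DHCP pool.

```python
import ipaddress


def expand_pool(first, last):
    """Return every IPv4 address in the inclusive range first-last."""
    start = ipaddress.IPv4Address(first)
    end = ipaddress.IPv4Address(last)
    if end < start:
        raise ValueError("range end is before range start")
    return [ipaddress.IPv4Address(i) for i in range(int(start), int(end) + 1)]


def overlaps(pool, dhcp_first, dhcp_last):
    """True if any address in pool falls inside the DHCP range."""
    dhcp_start = ipaddress.IPv4Address(dhcp_first)
    dhcp_end = ipaddress.IPv4Address(dhcp_last)
    return any(dhcp_start <= addr <= dhcp_end for addr in pool)


# Hypothetical addresses: a 10-address pool and a DHCP range that stops short of it.
pool = expand_pool("192.168.1.240", "192.168.1.249")
print(len(pool))
print(overlaps(pool, "192.168.1.100", "192.168.1.239"))
```

If the second value printed is True, the router could hand one of the load balancer addresses to another device.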

The metallb role establishes a pattern that the other roles follow: Ansible logs in to one of the master nodes and uses the Kubernetes Ansible and helm modules, together with the k3s credentials stored on the node, to install and configure the components.

Add variables to inventory/group_vars/k3s_cluster:

metallb_version: "0.10.3"

roles/k3s_cluster/metallb/tasks/main.yml:

- name: Install Kubernetes Python module
  pip:
    name: kubernetes

- name: Install Kubernetes-validate Python module
  pip:
    name: kubernetes-validate

- name: Deploy MetalLB Namespace Manifest
  kubernetes.core.k8s:
    kubeconfig: "/var/lib/rancher/k3s/server/cred/admin.kubeconfig"
    state: present
    definition: "{{ item }}"
    validate:
      fail_on_error: yes
  with_items: '{{ lookup("url", "https://raw.githubusercontent.com/metallb/metallb/v{{ metallb_version }}/manifests/namespace.yaml", split_lines=False) | from_yaml_all | list }}'
  when: item is not none
  run_once: true
  delegate_to: "{{ ansible_host }}"

- name: Deploy MetalLB Manifest
  kubernetes.core.k8s:
    kubeconfig: "/var/lib/rancher/k3s/server/cred/admin.kubeconfig"
    state: present
    definition: "{{ item }}"
    validate:
      fail_on_error: yes
  with_items: '{{ lookup("url", "https://raw.githubusercontent.com/metallb/metallb/v{{ metallb_version }}/manifests/metallb.yaml", split_lines=False) | from_yaml_all | list }}'
  when: item is not none
  run_once: true
  delegate_to: "{{ ansible_host }}"

- name: Deploy MetalLB Configmap
  kubernetes.core.k8s:
    kubeconfig: "/var/lib/rancher/k3s/server/cred/admin.kubeconfig"
    state: present
    definition: "{{ lookup('template', 'manifests/configmap.j2') }}"
    validate:
      fail_on_error: yes
  delegate_to: "{{ ansible_host }}"
  run_once: true

roles/k3s_cluster/metallb/templates/configmap.j2:

apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - xxx.xxx.xxx.240-xxx.xxx.xxx.249

If needed, the following playbook can be run to make changes to metallb by itself without executing the rest of the roles:

- hosts: master
  remote_user: ansible
  become: yes
  vars:
    ansible_python_interpreter: /usr/bin/python3
  pre_tasks:
    - name: Install Kubernetes Python module
      pip:
        name: kubernetes
    - name: Install Kubernetes-validate Python module
      pip:
        name: kubernetes-validate
  roles:
    - role: k3s_cluster/metallb

Traefik Ingress Controller Role

An ingress object routes external traffic coming into the cluster to the correct internal service. An ingress controller must be deployed to manage ingress objects. The list of ingress controllers available for Kubernetes is quite extensive, but k3s comes with Traefik by default, so we’re just going to do some additional customization. Note that the Traefik service is a LoadBalancer service and requires an external IP (from metallb).

We’re going to configure the Traefik Ingress Controller to work with Cloudflare DNS to deploy a wildcard SSL certificate from Let’s Encrypt. This configuration will allow me to manage a single SSL certificate for domain.tld so that each application that I deploy will use a subdomain app.domain.tld.
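To make the wildcard idea concrete, here is a minimal Python sketch of which hostnames a certificate for domain.tld plus *.domain.tld would cover. The function name is hypothetical and this is not how Traefik itself performs the check; it only illustrates the usual rule that a wildcard stands in for exactly one label.

```python
def covered_by_certificate(hostname, main):
    """True if hostname equals main or matches the wildcard *.main.

    The wildcard is treated as matching exactly one DNS label, so
    app.domain.tld matches but a.b.domain.tld does not.
    """
    hostname = hostname.lower().rstrip(".")
    main = main.lower().rstrip(".")
    if hostname == main:
        return True
    suffix = "." + main
    if not hostname.endswith(suffix):
        return False
    label = hostname[: -len(suffix)]
    return label != "" and "." not in label


print(covered_by_certificate("app.domain.tld", "domain.tld"))
print(covered_by_certificate("a.b.domain.tld", "domain.tld"))
```

The first call reports a match and the second does not, which is why every application sits exactly one level below the main domain.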

Traefik needs a persistent volume to store the certificates. Originally, I deployed the Longhorn storage provisioner first and used a Longhorn persistent volume for this, but this led to a bit of a chicken-and-egg problem when I was troubleshooting a Longhorn stability problem. In order to restore Longhorn volumes after resetting the cluster, I needed to access the Longhorn dashboard. I couldn’t access the Longhorn dashboard without the Traefik certificate volume. I solved this problem by putting the certificate volume on an NFS share on the QNAP NAS.

This method of customizing the Traefik helm installation is specific to k3s and doesn’t use the Kubernetes Ansible module. The helm config file is placed onto the master node and is applied when the k3s cluster is restarted. After the helm configuration is customized, the ConfigMap is deployed for additional customization.

Add variables to inventory/group_vars/k3s_cluster:

# List of the haproxy IP addresses so we know if we can trust the http headers

traefik_proxy_trusted: "xxx.xxx.xxx.250/32,xxx.xxx.xxx.251/32,xxx.xxx.xxx.254/32"

traefik_hostname: traefik-dash.domain.tld

traefik_certs_nfs_path: /path/to/traefik-certs

traefik_certs_nfs_host: xxx.xxx.xxx.xxx

letsencrypt_domain0: "domain1.tld"

letsencrypt_domain1: "domain2.tld"

cloudflare_email: "[email protected]"

cloudflare_token: "api token"

authelia_cluster_hostname: authelia.authelia.svc
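The traefik_proxy_trusted value is a comma-separated list of CIDR blocks. As a rough illustration of what "trusted" means here, the Python sketch below parses such a list and tests whether a client address falls inside it. The function names and the 192.168.1.x addresses are assumptions for the example; Traefik does its own matching internally.

```python
import ipaddress


def parse_trusted(value):
    """Parse a comma-separated list of CIDR blocks into network objects."""
    return [ipaddress.ip_network(item.strip()) for item in value.split(",") if item.strip()]


def is_trusted(client_ip, trusted):
    """True if client_ip belongs to any of the trusted networks."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in net for net in trusted)


# Hypothetical proxy addresses in the same shape as traefik_proxy_trusted.
trusted = parse_trusted("192.168.1.250/32,192.168.1.251/32,192.168.1.254/32")
print(is_trusted("192.168.1.250", trusted))
print(is_trusted("192.168.1.252", trusted))
```

Only requests arriving from one of the listed proxies should have their forwarded headers and PROXY protocol information believed.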

roles/k3s_cluster/traefik/tasks/main.yml:

- name: Traefik Config Customization
  ansible.builtin.template:
    src: manifests/traefik-config.j2
    dest: /var/lib/rancher/k3s/server/manifests/traefik-config.yaml
    owner: root
    group: root
    mode: "0644"

- name: Traefik ConfigMap
  kubernetes.core.k8s:
    kubeconfig: "/var/lib/rancher/k3s/server/cred/admin.kubeconfig"
    state: present
    definition: "{{ lookup('template', 'manifests/config.j2') }}"
    validate:
      fail_on_error: yes
  run_once: true
  delegate_to: "{{ ansible_host }}"

- name: Traefik Secrets
  kubernetes.core.k8s:
    kubeconfig: "/var/lib/rancher/k3s/server/cred/admin.kubeconfig"
    state: present
    definition: "{{ lookup('template', 'manifests/secrets.j2') }}"
    validate:
      fail_on_error: yes
  run_once: true
  delegate_to: "{{ ansible_host }}"

- name: Traefik Persistence Volumes - NFS
  kubernetes.core.k8s:
    kubeconfig: "/var/lib/rancher/k3s/server/cred/admin.kubeconfig"
    state: present
    definition: "{{ item }}"
    validate:
      fail_on_error: yes
  with_items: "{{ lookup('template', 'manifests/volume.j2') }}"
  when: item is not none
  run_once: true
  delegate_to: "{{ ansible_host }}"

- name: Traefik Dashboard
  kubernetes.core.k8s:
    kubeconfig: "/var/lib/rancher/k3s/server/cred/admin.kubeconfig"
    state: present
    definition: "{{ lookup('template', 'manifests/dashboard.j2') }}"
    validate:
      fail_on_error: yes
  run_once: true
  delegate_to: "{{ ansible_host }}"

roles/k3s_cluster/traefik/manifests/traefik-config.j2:

apiVersion: helm.cattle.io/v1
kind: HelmChartConfig
metadata:
  name: traefik
  namespace: kube-system
spec:
  valuesContent: |-
    additionalArguments:
      - --providers.file.filename=/data/traefik-config.yaml
      # For production
      - --certificatesresolvers.cloudflare.acme.caserver=https://acme-v02.api.letsencrypt.org/directory
      # For testing
      #- --certificatesresolvers.cloudflare.acme.caserver=https://acme-staging-v02.api.letsencrypt.org/directory
      - --certificatesresolvers.cloudflare.acme.dnschallenge.provider=cloudflare
      - --certificatesresolvers.cloudflare.acme.dnschallenge.resolvers=1.1.1.1:53,8.8.8.8:53
      - --certificatesresolvers.cloudflare.acme.storage=/certs/acme.json
      - --serversTransport.insecureSkipVerify=true
      - --api.dashboard=true
      - --entryPoints.web.proxyProtocol=true
      - --entryPoints.web.proxyProtocol.trustedIPs={{ traefik_proxy_trusted }}
      - --entryPoints.web.forwardedHeaders=true
      - --entryPoints.web.forwardedHeaders.trustedIPs={{ traefik_proxy_trusted }}
      - --entryPoints.websecure.proxyProtocol=true
      - --entryPoints.websecure.proxyProtocol.trustedIPs={{ traefik_proxy_trusted }}
      - --entryPoints.websecure.forwardedHeaders=true
      - --entryPoints.websecure.forwardedHeaders.trustedIPs={{ traefik_proxy_trusted }}
      - --entrypoints.websecure.http.tls.certresolver=cloudflare
      - --entrypoints.websecure.http.tls.domains[0].main={{ letsencrypt_domain0 }}
      - --entrypoints.websecure.http.tls.domains[0].sans=*.{{ letsencrypt_domain0 }}
      - --entrypoints.websecure.http.tls.domains[1].main={{ letsencrypt_domain1 }}
      - --entrypoints.websecure.http.tls.domains[1].sans=*.{{ letsencrypt_domain1 }}
    deployment:
      enabled: true
      replicas: 1
      annotations: {}
      # Additional pod annotations (e.g. for mesh injection or prometheus scraping)
      podAnnotations: {}
      # Additional containers (e.g. for metric offloading sidecars)
      additionalContainers: []
      # Additional initContainers (e.g. for setting file permission as shown below)
      initContainers:
        # The "volume-permissions" init container is required if you run into permission issues.
        # Related issue: https://github.com/containous/traefik/issues/6972
        - name: volume-permissions
          image: busybox:1.31.1
          command: ["sh", "-c", "chmod -Rv 600 /certs/*"]
          volumeMounts:
            - name: data
              mountPath: /certs
      # Custom pod DNS policy. Apply if `hostNetwork: true`
      # dnsPolicy: ClusterFirstWithHostNet
    service:
      spec:
        externalTrafficPolicy: Local
    ports:
      web:
        redirectTo: websecure
    env:
      - name: CF_DNS_API_TOKEN # or CF_API_KEY, see for more details - https://doc.traefik.io/traefik/https/acme/#providers
        valueFrom:
          secretKeyRef:
            key: apiToken
            name: cloudflare-apitoken-secret
    persistence:
      enabled: true
      existingClaim: traefik-certs-vol-pvc
      accessMode: ReadWriteMany
      size: 128Mi
      path: /certs
    volumes:
      - mountPath: /data
        name: traefik-config
        type: configMap

roles/k3s_cluster/traefik/manifests/config.j2:

apiVersion: v1
kind: ConfigMap
metadata:
  name: traefik-config
  namespace: kube-system
data:
  traefik-config.yaml: |
    http:
      middlewares:
        headers-default:
          headers:
            sslRedirect: true
            browserXssFilter: true
            contentTypeNosniff: true
            forceSTSHeader: true
            stsIncludeSubdomains: true
            stsPreload: true
            stsSeconds: 15552000
            customFrameOptionsValue: SAMEORIGIN
            customRequestHeaders:
              X-Forwarded-Proto: https

roles/k3s_cluster/traefik/manifests/volume.j2:

---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: traefik-certs-vol-pv
  namespace: kube-system
spec:
  capacity:
    storage: 128Mi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nfs
  mountOptions:
    - hard
    - nfsvers=4.1
  nfs:
    path: {{ traefik_certs_nfs_path }}
    server: {{ traefik_certs_nfs_host }}
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: traefik-certs-vol-pvc
  namespace: kube-system
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: nfs
  volumeName: traefik-certs-vol-pv
  resources:
    requests:
      storage: 128Mi

roles/k3s_cluster/traefik/manifests/secrets.j2:

---
apiVersion: v1
kind: Secret
metadata:
  name: cloudflare-apitoken-secret
  namespace: kube-system
type: Opaque
stringData:
  email: {{ cloudflare_email }}
  apiToken: {{ cloudflare_token }}

roles/k3s_cluster/traefik/manifests/dashboard.j2:

---
apiVersion: traefik.containo.us/v1alpha1
kind: IngressRoute
metadata:
  name: traefik-dashboard
  namespace: kube-system
spec:
  entryPoints:
    - websecure
  routes:
    - match: Host(`{{ traefik_hostname }}`) && (PathPrefix(`/dashboard`) || PathPrefix(`/api`))
      kind: Rule
      services:
        - name: api@internal
          kind: TraefikService
      middlewares:
        - name: authme
          namespace: kube-system
---
apiVersion: traefik.containo.us/v1alpha1
kind: Middleware
metadata:
  name: authme
  namespace: kube-system
spec:
  forwardAuth:
    address: http://{{ authelia_cluster_hostname }}/api/verify?rd=https://{{ authelia_hostname }}
    trustForwardHeader: true

The Traefik dashboard does not have its own authentication system. The Middleware object checks with Authelia to see whether the user has been authenticated and is authorized to view the dashboard. If the user is not authenticated, Authelia will attempt to authenticate them. I’ll cover OpenLDAP and Authelia in detail in a future post.
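The decision the forwardAuth Middleware makes can be summarized in a few lines of Python. This is only a sketch of Traefik’s documented behavior (a 2xx answer from the auth server lets the original request through; any other answer is handed back to the client), and the AuthResponse class, the function name, and the example URL are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class AuthResponse:
    """What the auth server (Authelia here) answered for one request."""
    status: int
    headers: dict = field(default_factory=dict)


def forward_auth_decision(auth_response):
    """Return 'forward' when access is granted, otherwise 'return-auth-response'."""
    if 200 <= auth_response.status < 300:
        # Access granted: the original request continues to the dashboard.
        return "forward"
    # Anything else (for example a redirect to a login page) goes back to the client.
    return "return-auth-response"


print(forward_auth_decision(AuthResponse(200)))
print(forward_auth_decision(AuthResponse(302, {"Location": "https://auth.example.invalid/"})))
```

In the second case the browser receives the auth server’s redirect and the dashboard is never reached.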

Finally, a standalone playbook can be used to execute the role by itself.

k3s-traefik.yml:

- hosts: master[0]
  become: yes
  vars:
    ansible_python_interpreter: /usr/bin/python3
  remote_user: ansible
  pre_tasks:
    - name: Install Kubernetes Python module
      pip:
        name: kubernetes
    - name: Install Kubernetes-validate Python module
      pip:
        name: kubernetes-validate
  roles:
    - role: k3s_cluster/traefik

Longhorn Storage Role

Longhorn is a Kubernetes-native distributed block storage system provided by Rancher for bare metal clusters. Volumes are replicated across multiple nodes, and Longhorn supports features such as snapshots, backups, and disaster recovery from S3-compatible storage or an NFS server.

I’ll need to start with the new variables. I’ve specified an NFS path on my NAS which I want to use for Longhorn volume backups. The entire NAS is backed up offsite to Backblaze B2.

The Longhorn dashboard needs to be available quite early in the process, before I have any other workloads available. This leads to another chicken-and-egg problem, similar to the one with the Traefik SSL certificates. Instead of using Authelia for authentication, I used plain old basic authentication.

The string that sets the user and password is generated with the htpasswd command and then base64-encoded:

$ htpasswd -nb admin password | openssl base64

YWRtaW46JGFwcjEkWEZ2SXV0TFMkNlIzTFVRbjM3WVNrU0pDZG9ndGhSMAoK

NOTE: It should be obvious, but don’t use password as a password.
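To see what that string actually contains, the following Python sketch decodes it back into the htpasswd line. It uses only the standard library; the helper names are made up for this example, and it inspects the value rather than generating a new hash.

```python
import base64

ENCODED = "YWRtaW46JGFwcjEkWEZ2SXV0TFMkNlIzTFVRbjM3WVNrU0pDZG9ndGhSMAoK"


def decode_htpasswd_entry(encoded):
    """Decode the base64 value and split it into (user, password_hash)."""
    line = base64.b64decode(encoded).decode("ascii").strip()
    user, _, password_hash = line.partition(":")
    return user, password_hash


def encode_htpasswd_line(line):
    """Base64-encode an htpasswd line, as `openssl base64` does for short input."""
    return base64.b64encode(line.encode("ascii")).decode("ascii")


user, password_hash = decode_htpasswd_entry(ENCODED)
print(user)
# The field between the first two "$" characters names the hash scheme.
print(password_hash.split("$")[1])
```

The decoded line has the form user:hash, where the hash begins with $apr1$, the Apache MD5 scheme that htpasswd uses by default.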

inventory/group_vars/k3s_cluster:

longhorn_backup_target: "nfs://xxx.xxx.xxx.xxx:/path/to/longhorn-backup"

longhorn_version: "1.3.1"

longhorn_user1: "YWRtaW46JGFwcjEkWEZ2SXV0TFMkNlIzTFVRbjM3WVNrU0pDZG9ndGhSMAoK"

roles/k3s_cluster/longhorn/tasks/main.yml:

- name: Longhorn Namespace
  kubernetes.core.k8s:
    kubeconfig: "/var/lib/rancher/k3s/server/cred/admin.kubeconfig"
    state: present
    definition: "{{ lookup('template', 'manifests/namespace.j2') }}"
    validate:
      fail_on_error: yes
  delegate_to: "{{ ansible_host }}"
  run_once: true

- name: Longhorn Default settings
  kubernetes.core.k8s:
    kubeconfig: "/var/lib/rancher/k3s/server/cred/admin.kubeconfig"
    state: present
    definition: "{{ lookup('template', 'manifests/defaultsettings.j2') }}"
    validate:
      fail_on_error: yes
  delegate_to: "{{ ansible_host }}"
  run_once: true

- name: Longhorn Deployment
  kubernetes.core.k8s:
    kubeconfig: "/var/lib/rancher/k3s/server/cred/admin.kubeconfig"
    state: present
    definition: "{{ item }}"
    validate:
      fail_on_error: no
      strict: no
  with_items: '{{ lookup("url", "https://raw.githubusercontent.com/longhorn/longhorn/v{{ longhorn_version }}/deploy/longhorn.yaml", split_lines=False) | from_yaml_all | list }}'
  when:
    - item is not none
    - item.metadata.name != 'longhorn-default-setting'
  run_once: true
  delegate_to: "{{ ansible_host }}"

- name: Longhorn Storage Class
  kubernetes.core.k8s:
    kubeconfig: "/var/lib/rancher/k3s/server/cred/admin.kubeconfig"
    state: present
    definition: "{{ lookup('template', 'manifests/storageclass.j2') }}"
    validate:
      fail_on_error: yes
  delegate_to: "{{ ansible_host }}"
  run_once: true

- name: Longhorn RecurringJobs
  kubernetes.core.k8s:
    kubeconfig: "/var/lib/rancher/k3s/server/cred/admin.kubeconfig"
    state: present
    definition: "{{ lookup('template', 'manifests/recurringjobs.j2') }}"
    validate:
      fail_on_error: yes
  delegate_to: "{{ ansible_host }}"
  run_once: true

- name: Longhorn Ingress
  kubernetes.core.k8s:
    kubeconfig: "/var/lib/rancher/k3s/server/cred/admin.kubeconfig"
    state: present
    definition: "{{ lookup('template', 'manifests/ingress.j2') }}"
    validate:
      fail_on_error: yes
  delegate_to: "{{ ansible_host }}"
  run_once: true

roles/k3s_cluster/longhorn/manifests/namespace.j2:

kind: Namespace
apiVersion: v1
metadata:
  name: longhorn-system

roles/k3s_cluster/longhorn/manifests/defaultsettings.j2:

apiVersion: v1
kind: ConfigMap
metadata:
  name: longhorn-default-setting
  namespace: longhorn-system
data:
  default-setting.yaml: |-
    backup-target: "{{ longhorn_backup_target }}"
    default-replica-count: 3
    default-data-locality: disabled
    default-longhorn-static-storage-class: longhorn-static
    priority-class: system-cluster-critical
    auto-delete-pod-when-volume-detached-unexpectedly: true
    node-down-pod-deletion-policy: delete-both-statefulset-and-deployment-pod

roles/k3s_cluster/longhorn/manifests/storageclass.j2:

kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: longstore
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: driver.longhorn.io
allowVolumeExpansion: true
reclaimPolicy: Delete
volumeBindingMode: Immediate
parameters:
  numberOfReplicas: "3"
  staleReplicaTimeout: "2880"
  fromBackup: ""
  fsType: "ext4"

roles/k3s_cluster/longhorn/manifests/recurringjobs.j2:

---
apiVersion: longhorn.io/v1beta1
kind: RecurringJob
metadata:
  name: snapshot-1
  namespace: longhorn-system
spec:
  cron: "05 15 * * *"
  task: "snapshot"
  groups:
    - default
  retain: 1
  concurrency: 1
  labels:
    snap: daily
---
apiVersion: longhorn.io/v1beta1
kind: RecurringJob
metadata:
  name: backup-1
  namespace: longhorn-system
spec:
  cron: "45 23 * * *"
  task: "backup"
  groups:
    - default
  retain: 5
  concurrency: 2
  labels:
    backup: daily
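The two cron expressions in the recurring jobs above use the standard five-field layout (minute, hour, day of month, month, day of week). A minimal Python sketch, with a hypothetical helper that only handles simple daily schedules like these two, turns them into a readable time of day. Which time zone applies depends on the cluster and is not something this sketch can tell you.

```python
def daily_time(cron):
    """Return HH:MM for a cron expression that runs once every day."""
    minute, hour, day_of_month, month, day_of_week = cron.split()
    if (day_of_month, month, day_of_week) != ("*", "*", "*"):
        raise ValueError("only simple daily schedules are handled here")
    return f"{int(hour):02d}:{int(minute):02d}"


print(daily_time("05 15 * * *"))  # the snapshot job
print(daily_time("45 23 * * *"))  # the backup job
```

So the snapshot job fires at 15:05 and the backup job at 23:45 each day.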

roles/k3s_cluster/longhorn/manifests/ingress.j2:

---
apiVersion: traefik.containo.us/v1alpha1
kind: IngressRoute
metadata:
  name: longhorn-ingressroute
  namespace: longhorn-system
spec:
  entryPoints:
    - web
    - websecure
  routes:
    - match: Host(`longhorn.domain.tld`)
      kind: Rule
      services:
        - name: longhorn-frontend
          port: 80
      middlewares:
        - name: longhorn-auth-basic
          namespace: longhorn-system
---
# Longhorn UI users
apiVersion: traefik.containo.us/v1alpha1
kind: Middleware
metadata:
  name: longhorn-auth-basic
  namespace: longhorn-system
spec:
  basicAuth:
    secret: authsecret
    realm: longhorn
---
apiVersion: v1
kind: Secret
metadata:
  name: authsecret
  namespace: longhorn-system
data:
  users: |2
    {{ longhorn_user1 }}

The standalone playbook for Longhorn looks similar to the others.

k3s-longhorn.yml:

---
- hosts: master[0]
  become: yes
  vars:
    ansible_python_interpreter: /usr/bin/python3
  remote_user: ansible
  pre_tasks:
    - name: Install Kubernetes Python module
      pip:
        name: kubernetes
    - name: Install Kubernetes-validate Python module
      pip:
        name: kubernetes-validate
  roles:
    - role: k3s_cluster/longhorn

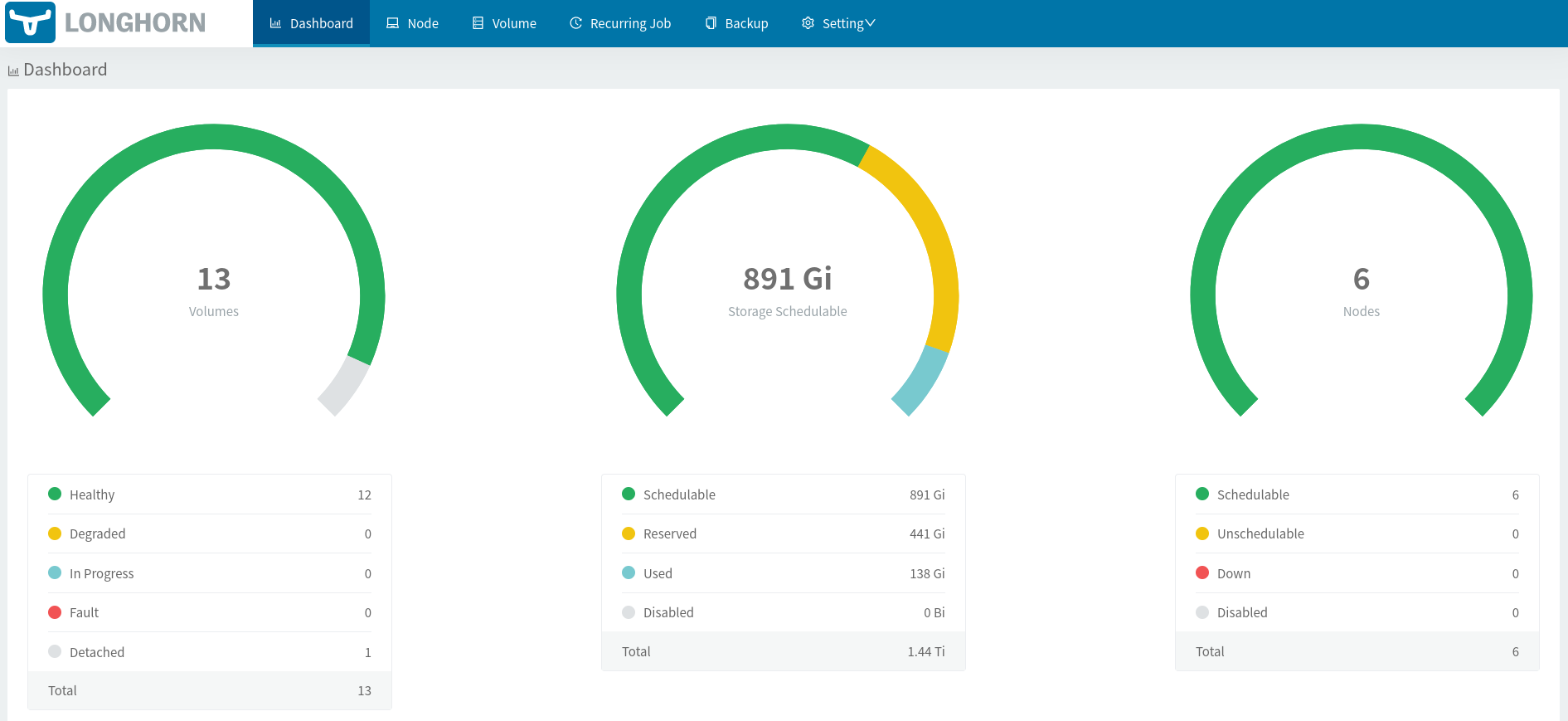

At this point, I’ve created a storage class which will provision a persistent volume using Longhorn and replicated across multiple nodes. A backup and a snapshot of any volumes will be created every day by default at the NFS location. These backups can be restored through the Longhorn dashboard.

With all of the applications deployed, my dashboard looks like this as of this post:

In my next post, I will cover a few more foundational components which will be needed before I start deploying end user applications. Since I’m self-hosting on my home Internet connection with a dynamic IP address, I need a way to update the DNS entries at Cloudflare when my external IP address changes. I will also need to deploy cert-manager to manage cluster certificates other than the ingress certificates managed by Traefik. I can then deploy OpenLDAP and Authelia for authentication with two-factor, authorization, and single sign-on capabilities.